Does It Matter if an AI Chatbot Cites Its Sources?

Our new study reveals how different citation types affect the trustworthiness of AI-generated answers.

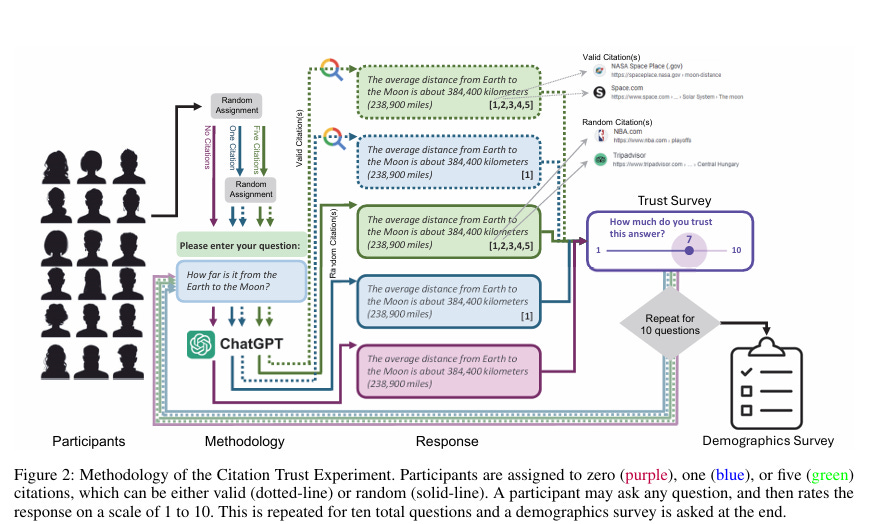

My colleagues and I recently conducted a study evaluating how citations in AI-generated answers influence user trust. The study was accepted to the 39th Annual AAAI Conference on Artificial Intelligence, and the full PDF is freely available online. Below is a figure that outlines our experimental procedure.

Methods

Participants completed the study online and asked our AI chatbot 10 questions. Each participant was randomly assigned to 1 of 3 conditions, seeing 0, 1, or 5 citations for every answer the chatbot gave. Half of the participants in the 1- and 5-citation conditions saw accurate citations (e.g., NASA.gov for a question about space), and the other half saw random citations (e.g., nascar.com for a question about space). To check a citation, participants had to hover their mouse over it.
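To make the design concrete, here is a minimal Python sketch of the two-stage assignment described above: first the number of citations (0, 1, or 5), then, for the 1- and 5-citation conditions, whether citations are accurate or random. The function names, topic labels, and domain lists are hypothetical and for illustration only; apart from NASA.gov and nascar.com, none of them come from the study, and this is not the code we actually used.

```python
import random
from dataclasses import dataclass
from typing import Optional

# Hypothetical citation pools, for illustration only (not the study's materials).
RELEVANT = {
    "space": ["nasa.gov", "esa.int", "space.com", "skyandtelescope.org", "planetary.org"],
    "cooking": ["allrecipes.com", "seriouseats.com", "foodnetwork.com",
                "bonappetit.com", "epicurious.com"],
}
UNRELATED = ["nascar.com", "espn.com", "imdb.com", "zillow.com", "kbb.com"]
ALL_DOMAINS = sorted({d for pool in RELEVANT.values() for d in pool} | set(UNRELATED))


@dataclass(frozen=True)
class Condition:
    n_citations: int              # 0, 1, or 5 citations per answer
    citation_type: Optional[str]  # "accurate", "random", or None when there are no citations


def assign_condition(rng: random.Random) -> Condition:
    """Assign one participant: first the citation count, then the citation type."""
    n = rng.choice([0, 1, 5])
    if n == 0:
        return Condition(0, None)
    # Independent coin flip per participant: roughly (not exactly) half get each type.
    return Condition(n, rng.choice(["accurate", "random"]))


def pick_citations(condition: Condition, topic: str, rng: random.Random) -> list[str]:
    """Choose the citations shown with one answer on the given topic."""
    if condition.n_citations == 0:
        return []
    if condition.citation_type == "accurate":
        pool = RELEVANT[topic]
    else:
        # "Random" citations are drawn from domains not relevant to the topic.
        pool = [d for d in ALL_DOMAINS if d not in RELEVANT[topic]]
    return rng.sample(pool, condition.n_citations)


rng = random.Random(42)
participant = assign_condition(rng)
print(participant, pick_citations(participant, "space", rng))
```

This uses simple per-participant randomization, so the "half accurate, half random" split is only approximate; a study wanting exactly equal cell sizes would typically use blocked or balanced assignment instead.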

Let’s dive into our results!

The Power of Citations

We discovered that citations significantly boost trust in AI-generated answers, even when the citations are random. Citations act as "social proof," signaling credibility to users, even if they don’t always verify the sources.

Citations vs. No Citations: Responses with citations were consistently rated as more trustworthy compared to those without.

Random Citations: Trust was lower when citations were random, but responses with random citations were still rated as more trustworthy than responses with no citations.

How Many Citations Are Enough?

The number of citations didn’t matter as much as their presence. Whether an answer had one citation or five, trust levels remained similar. This suggests that a single, well-chosen citation can be just as effective as multiple references in building confidence.

Trust and Citation Checking

Checking citations (hovering the mouse over a link to reveal the source) was often associated with lower trust. This aligns with the "anti-monitoring" theory of trust: when users trust an entity, they feel less need to verify it.

Participants who checked citations were likely skeptical from the start, and finding irrelevant or incorrect references further eroded trust.

Random citations, when checked, were rated as no more trustworthy than responses without citations at all.

Question Types Matter

We also checked whether the type of question asked (grouped by broad topic) predicted the perceived trustworthiness of the answer.

Political and Factual Questions: These were rated as more trustworthy, possibly because users were more familiar with these topics or because the answers aligned with their beliefs.

Complex Questions: More intricate questions were trusted slightly less, likely because they require the AI to infer more, leading to less confidence in the answers.

What This Means for AI Chatbots

This study highlights the importance of balancing transparency, accuracy, and user perception:

Citations can significantly enhance trust, but they must be relevant and accurate to avoid skepticism.

A single, high-quality citation may be sufficient to boost credibility.

Trust in AI systems is nuanced and depends on user behavior, question type, and expectations.

Final Thoughts

As AI continues to integrate into our daily lives, understanding what fosters or undermines trust is critical. Including citations in AI-generated answers can go a long way, but designers must ensure those citations are accurate and meaningful. I’m working on a few more studies about how people interact with and perceive information from AI chatbots. I’m looking forward to sharing the results when they are ready!
